Then, run the following command in Snowflake. You should be able to see the file in the result pane.

Now we reach the final stage, creating the Snowpipe. Let’s first create a table as the destination of the Snowpipe.

CREATE OR REPLACE TABLE "SNOWPIPE_DEMO"."PUBLIC"."EMPLOYEE" ( id STRING, name STRING )

Then, create the Snowpipe using the following SQL command:

CREATE OR REPLACE pipe "SNOWPIPE_DEMO"."PUBLIC"."DEMO_PIPE" auto_ingest = true integration = 'SNOWPIPE_DEMO_EVENT' as copy into "SNOWPIPE_DEMO"."PUBLIC"."EMPLOYEE" from file_format = (type = 'CSV')

Now, you’ll be able to show the pipe that you’ve just created. The emp.csv file was already in the Blob Container before we created the Snowpipe, so the pipe won’t load it automatically: the pipe relies on the “Blob Created” event to trigger itself, but that event happened before the pipe was created.
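The table and pipe steps in this section can be sketched as one script. This is a minimal sketch, not the author's exact commands: the stage name DEMO_STAGE is a hypothetical placeholder, because the stage reference is missing from the pipe definition in the text. It assumes an external stage pointing at the Blob Container already exists. The last statement is an optional extra that the text does not cover: ALTER PIPE ... REFRESH asks Snowpipe to queue files that were already staged (within the last 7 days), which may be one way to pick up a file such as emp.csv that arrived before the pipe existed.

```sql
-- Destination table for the pipe (taken from the text).
CREATE OR REPLACE TABLE "SNOWPIPE_DEMO"."PUBLIC"."EMPLOYEE" (
  id   STRING,
  name STRING
);

-- Assumption: DEMO_STAGE is a hypothetical external stage name; replace it
-- with the stage that points at your Blob Container.
CREATE OR REPLACE PIPE "SNOWPIPE_DEMO"."PUBLIC"."DEMO_PIPE"
  AUTO_INGEST = TRUE
  INTEGRATION = 'SNOWPIPE_DEMO_EVENT'
  AS
  COPY INTO "SNOWPIPE_DEMO"."PUBLIC"."EMPLOYEE"
  FROM @"SNOWPIPE_DEMO"."PUBLIC"."DEMO_STAGE"
  FILE_FORMAT = (TYPE = 'CSV');

-- List the pipes and check the state of the new one.
SHOW PIPES;
SELECT SYSTEM$PIPE_STATUS('SNOWPIPE_DEMO.PUBLIC.DEMO_PIPE');

-- Optional: queue files that were staged before the pipe was created.
ALTER PIPE "SNOWPIPE_DEMO"."PUBLIC"."DEMO_PIPE" REFRESH;
```

Files uploaded after the pipe is created should not need the refresh, since each new upload raises its own “Blob Created” event.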

create notification integration SNOWPIPE_DEMO_EVENT enabled = true type = queue notification_provider = azure_storage_queue azure_storage_queue_primary_uri = ' ' azure_tenant_id = ''

Note: It is highly recommended to use ALL UPPER CASE when defining Integration names, to avoid case-sensitivity problems in some Snowflake scenarios.

Tips: Once done, you can run the show integrations command to retrieve all the Integrations in your Snowflake account.

Authentication of Snowflake Application to Azure

Now, Snowflake knows where to go in Azure to get the notifications (Azure Events), but we still need to let Azure authenticate our Snowflake account. By default, for security purposes, we should of course never let the Azure Storage Account that stages our data be publicly accessible. Let’s first run the following SQL command in Snowflake:

DESC notification integration SNOWPIPE_DEMO_EVENT

In the result pane, you’ll see the AZURE_CONSENT_URL property, and the login URL is available in the property_value column. Copy and paste it into your browser and log in to your Azure account as you usually do. Azure should then display a permission request; click the Accept button.
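The integration steps in this section can be laid out as a short script. This is a sketch under assumptions: the values in angle brackets are placeholders that you must replace with your own storage account, queue name and Azure tenant ID, and the queue URI pattern shown is the usual form of an Azure Storage Queue URL rather than a value taken from the text.

```sql
-- Creating an integration requires the Account Admin role.
USE ROLE ACCOUNTADMIN;

-- Placeholders in angle brackets are assumptions; fill in your own values.
CREATE NOTIFICATION INTEGRATION SNOWPIPE_DEMO_EVENT
  ENABLED = TRUE
  TYPE = QUEUE
  NOTIFICATION_PROVIDER = AZURE_STORAGE_QUEUE
  AZURE_STORAGE_QUEUE_PRIMARY_URI = 'https://<storage_account>.queue.core.windows.net/<queue_name>'
  AZURE_TENANT_ID = '<tenant_id>';

-- Confirm the integration exists.
SHOW INTEGRATIONS;

-- Find the AZURE_CONSENT_URL row and open its property_value in a browser.
DESC NOTIFICATION INTEGRATION SNOWPIPE_DEMO_EVENT;
```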

To begin with, let’s create a resource group to organise the storage accounts that are going to be built for Snowpipe. On your Azure Portal, navigate to Resource groups and click Add. Then, input the name for this resource group. You may choose a region that is identical or close to your Snowflake region for the best performance.

To create an Integration in Snowflake, you’ll need to be an Account Admin.


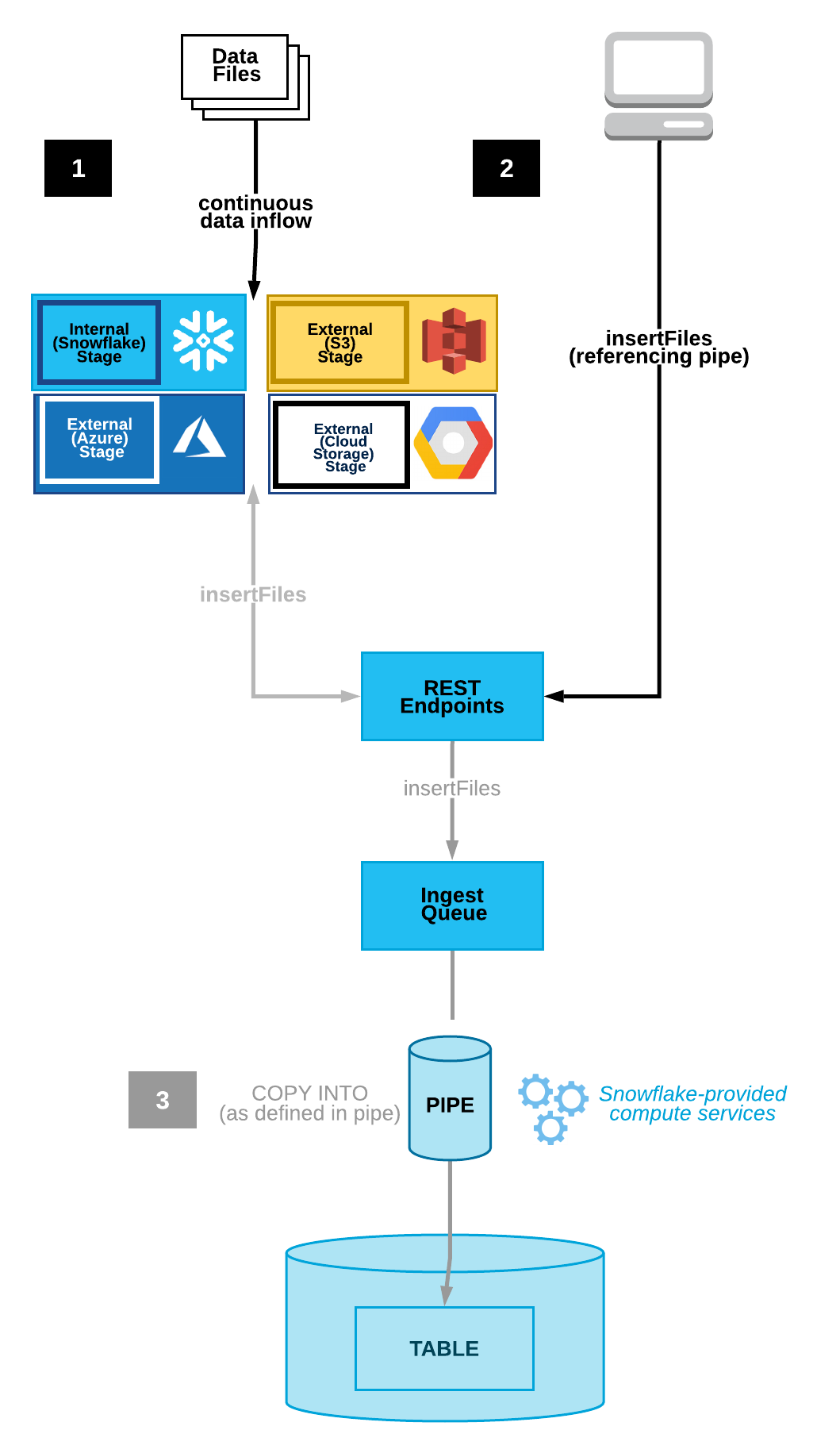

Building Snowpipe on Azure Blob Storage Using Azure Portal Web UI for Snowflake Data Warehouse

Snowpipe is a built-in data ingestion mechanism of Snowflake Data Warehouse. It is able to monitor and automatically pick up flat files from cloud storage (e.g. Azure Blob Storage, Amazon S3) and use the “COPY INTO” SQL command to load the data into a Snowflake table.

In the official documentation, you’ll find a nice tutorial: Automating Snowpipe for Azure Blob Storage. However, that tutorial uses the Azure CLI (command line interface) to build the cloud storage component and the Azure Event Subscription. In my opinion, this is not intuitive enough, especially for new users and people who do not have enough knowledge of Azure. Even though they can build the Snowpipe by following these commands, the overall architecture and data flow may still be unclear to them. In other words, the official tutorial tells you how to build a Snowpipe, but it makes it difficult to understand what you have built.

In this tutorial, I’ll introduce how to build a Snowpipe using the Azure Portal, the Web-based UI of Azure, which I believe will give you a better intuition about how the Snowpipe works.
